
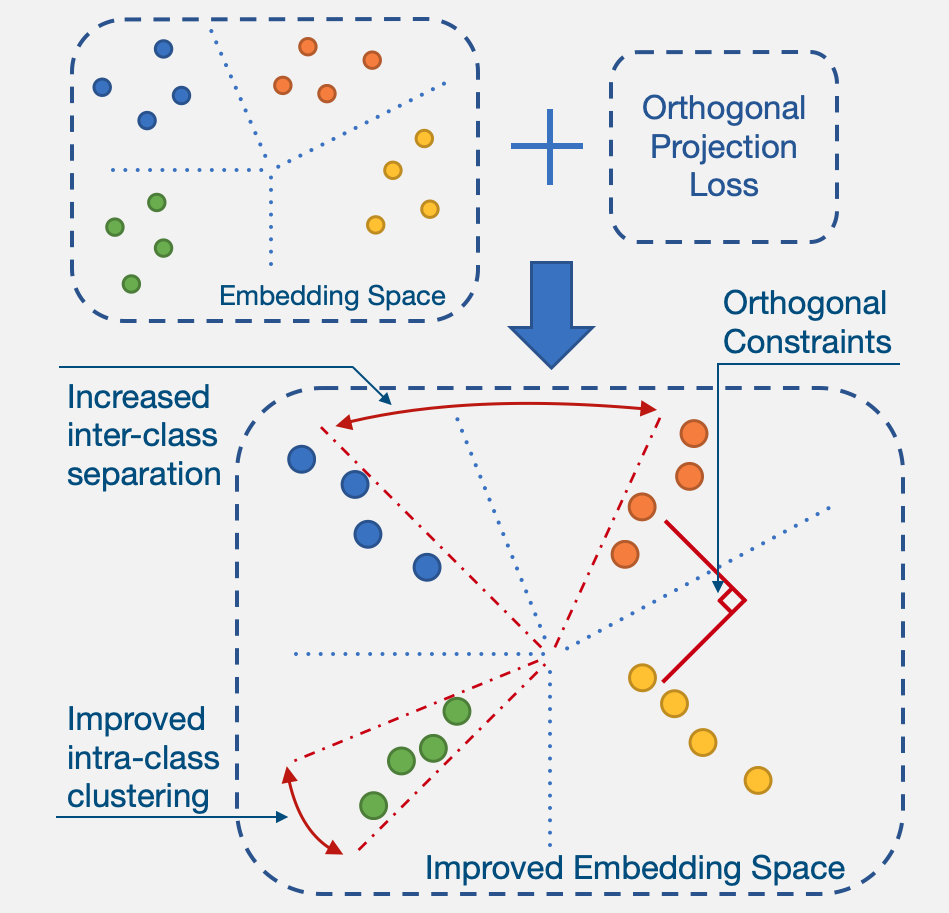

During training of a deep neural network, within each mini-batch, OPL enforces separation between the features of samples from different classes while clustering together the features of samples from the same class.
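The caption does not spell out the loss formula. The sketch below is a minimal pure-Python illustration under stated assumptions, not the authors' implementation. It assumes L2-normalized features and the form (1 − s) + γ·|d|, where s is the mean cosine similarity over same-class pairs (self-pairs excluded) and d is the mean over different-class pairs. The names `opl_loss` and `gamma`, and the default γ = 0.5, are hypothetical choices for illustration.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a feature vector to unit L2 norm (an all-zero vector stays zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else [0.0] * len(v)


def opl_loss(features: Sequence[Sequence[float]], labels: Sequence[int],
             gamma: float = 0.5) -> float:
    """Illustrative OPL-style loss for one mini-batch (assumed form, see lead-in).

    s = mean cosine similarity over same-class pairs      -> pulled towards 1
    d = mean cosine similarity over different-class pairs -> pushed towards 0
    loss = (1 - s) + gamma * |d|
    """
    unit = [_normalize(f) for f in features]
    pos_sum = neg_sum = 0.0
    pos_n = neg_n = 0
    for i, fi in enumerate(unit):
        for j, fj in enumerate(unit):
            if i == j:
                continue  # skip self-pairs: their similarity is trivially 1
            dot = sum(a * b for a, b in zip(fi, fj))
            if labels[i] == labels[j]:
                pos_sum += dot
                pos_n += 1
            else:
                neg_sum += dot
                neg_n += 1
    # If a batch has no same-class (or no different-class) pairs, treat that mean as 0.
    s = pos_sum / pos_n if pos_n else 0.0
    d = neg_sum / neg_n if neg_n else 0.0
    return (1.0 - s) + gamma * abs(d)


# Two classes whose features are already clustered and mutually orthogonal.
feats = [[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [0.0, 3.0]]
print(opl_loss(feats, [0, 0, 1, 1]))  # 0.0
```

Swapping the labels so that each class holds one vector from each direction, as in `opl_loss([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]], [0, 0, 1, 1])`, raises the loss to 1.25. Same-class features are then orthogonal (s = 0), and different-class features partly align (d = 0.5).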


Deep neural networks have achieved remarkable performance on a range of classification tasks, with softmax cross-entropy (CE) loss emerging as the de facto objective function. The CE loss encourages the features of a class to have a higher projection score on the true class vector than on the vectors of the negative classes. However, this is a relative constraint and does not explicitly force the features of different classes to be well separated. Motivated by the observation that the ground-truth class representations in CE loss are orthogonal (one-hot encoded vectors), we develop a novel loss function termed “Orthogonal Projection Loss” (OPL), which imposes orthogonality in the feature space. OPL augments the properties of CE loss and directly enforces inter-class separation alongside intra-class clustering in the feature space through orthogonality constraints at the mini-batch level. Compared with other alternatives to CE, OPL offers unique advantages: it introduces no additional learnable parameters, does not require careful negative mining, and is not sensitive to the batch size. Given the plug-and-play nature of OPL, we evaluate it on a diverse range of tasks, including image recognition (CIFAR-100), large-scale classification (ImageNet), domain generalization (PACS), and few-shot learning (miniImageNet, CIFAR-FS, tieredImageNet, and Meta-Dataset), and demonstrate its effectiveness across the board. Furthermore, OPL offers better robustness against practical nuisances such as adversarial attacks and label noise.
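The claim that CE imposes only a relative constraint can be made concrete with a hand-made toy calculation; it is not taken from the paper. The example assumes two classes whose class vectors are the one-hot directions e_0 and e_1, so a feature's logits are simply its coordinates. It also assumes two features from different classes that point in almost the same direction. The helpers `cross_entropy`, `cosine` and `logits_for`, as well as the feature values, are illustrative assumptions.

```python
import math
from typing import List, Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one sample, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Assumed toy classifier: one-hot class vectors, so logits equal feature coordinates.
class_vectors: List[List[float]] = [[1.0, 0.0], [0.0, 1.0]]


def logits_for(feature: Sequence[float]) -> List[float]:
    return [sum(w * x for w, x in zip(wc, feature)) for wc in class_vectors]


f_a = [100.0, 95.0]  # a sample of class 0
f_b = [95.0, 100.0]  # a sample of class 1

ce_a = cross_entropy(logits_for(f_a), target=0)
ce_b = cross_entropy(logits_for(f_b), target=1)
print(f"CE(a) = {ce_a:.4f}, CE(b) = {ce_b:.4f}")        # CE(a) = 0.0067, CE(b) = 0.0067
print(f"cos(f_a, f_b) = {cosine(f_a, f_b):.4f}")        # cos(f_a, f_b) = 0.9987
print(f"cos(e_0, e_1) = {cosine(*class_vectors):.1f}")  # cos(e_0, e_1) = 0.0
```

Both samples already have a CE loss below 0.01, because each true-class logit exceeds the other by a margin of 5. Even so, the two features of different classes are nearly parallel. The one-hot targets themselves are orthogonal, and a constraint that asks the features to mirror that orthogonality would still penalize this pair.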


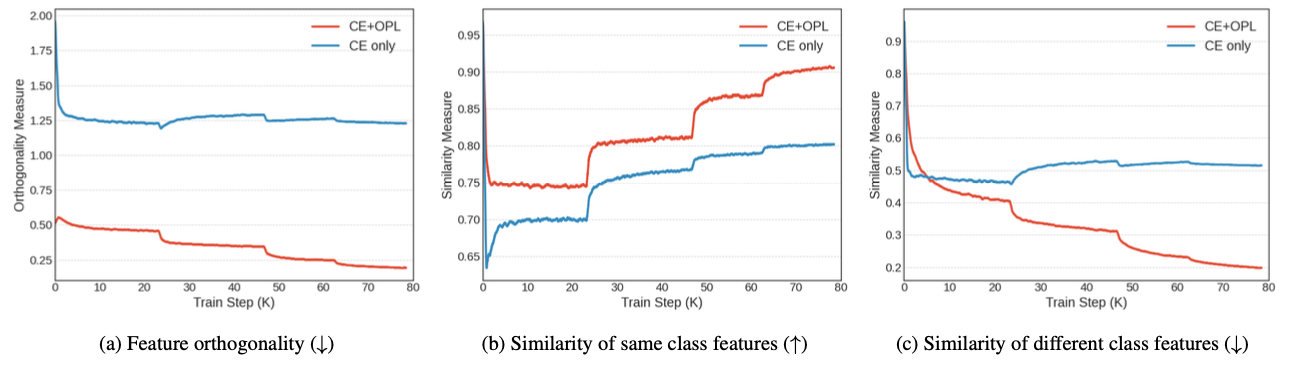

Feature Analysis: We compare feature orthogonality as measured by OPL and feature similarity as measured by cosine similarity, and plot their convergence during training. Feature similarity is initially high because all features are random immediately after initialization. Compared with the CE baseline, OPL simultaneously enforces higher intra-class similarity and lower inter-class similarity.
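Curves like the ones described in this caption could be produced by logging two batch statistics during training. The sketch below assumes the measurement is the mean pairwise cosine similarity of L2-normalized features, split by whether a pair shares a label. This is not the authors' evaluation code, and `similarity_stats` is a hypothetical helper.

```python
import math
from typing import List, Sequence, Tuple


def _mean(xs: List[float]) -> float:
    """Arithmetic mean; NaN for an empty list (e.g. a batch with a single class)."""
    return sum(xs) / len(xs) if xs else float("nan")


def similarity_stats(features: Sequence[Sequence[float]],
                     labels: Sequence[int]) -> Tuple[float, float]:
    """Return (mean intra-class, mean inter-class) cosine similarity for a batch.

    Each unordered pair (i, j) with i < j is counted once; self-pairs are skipped.
    Assumes no feature vector is all zeros.
    """
    unit = []
    for f in features:
        norm = math.sqrt(sum(x * x for x in f))
        unit.append([x / norm for x in f])
    intra: List[float] = []
    inter: List[float] = []
    for i in range(len(unit)):
        for j in range(i + 1, len(unit)):
            sim = sum(a * b for a, b in zip(unit[i], unit[j]))
            if labels[i] == labels[j]:
                intra.append(sim)
            else:
                inter.append(sim)
    return _mean(intra), _mean(inter)


# Hypothetical usage: log both numbers once per epoch on a fixed held-out batch.
batch = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.1, 0.9]]
intra, inter = similarity_stats(batch, [0, 0, 1, 1])
print(f"intra-class: {intra:.3f}  inter-class: {inter:.3f}")
```

If training behaves as the caption describes, the first number should climb towards 1 and the second should fall towards 0 faster with OPL than with CE alone.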


Orthogonal Projection Loss. In ICCV, 2021 (hosted on arXiv).

Results across tasks:

| Task | Dataset | Baseline | OPL | Metric / Setting |
|---|---|---|---|---|
| Classification | CIFAR-100 | 72.40% | 73.52% | acc@1 |
| Classification | ImageNet | 78.31% | 79.26% | acc@1 |
| Few-Shot Classification | CIFAR-FS | 71.45% | 73.02% | 1-shot |
| Few-Shot Classification | miniImageNet | 62.02% | 63.10% | 1-shot |
| Few-Shot Classification | tieredImageNet | 69.74% | 70.20% | 1-shot |
| Few-Shot Classification | Meta-Dataset (avg) | 71.4% | 71.9% | varying shot |
| Domain Generalization | PACS (avg) | 87.47% | 88.48% | acc@1 |
| Label Noise | CIFAR-10 | 87.62% | 88.45% | acc@1 |
| Label Noise | CIFAR-100 | 62.64% | 65.62% | acc@1 |
| Adversarial Robustness | CIFAR-10 | 54.92% | 55.73% | acc@1 |
| Adversarial Robustness | CIFAR-100 | 28.42% | 30.05% | acc@1 |