Action Recognition in Videos

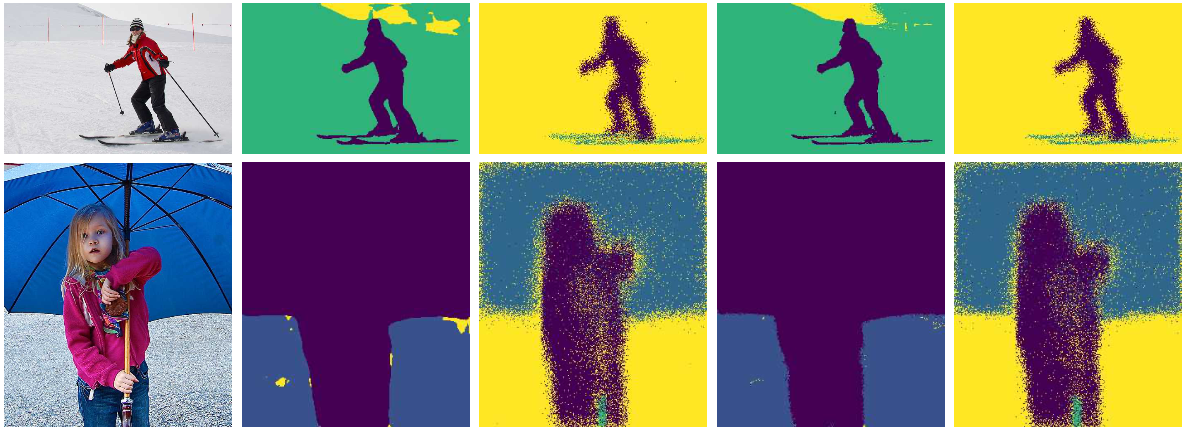

This project investigated action recognition in videos. We examined the individual contributions of static and motion domain information to action recognition and established that optimal contribution ratios exist. We validated this finding experimentally by using Cholesky transformations to control how strongly each fused feature vector correlates with its parent vectors. We also explored the role that the underlying temporal trends of video sub-events play in recognizing compound actions. I was individually responsible for modelling that temporal trend: I experimented with recurrent neural networks (RNNs) because of their greater representational capacity, and established that they outperform traditional techniques for our use case of modelling temporally evolving feature vectors. This work resulted in two peer-reviewed publications, at DICTA 2017 and in TCSVT 2019. Code for the RNN component of this system is available here.
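To make the Cholesky-based correlation control more concrete, here is a minimal NumPy sketch of one generic way to build a fused vector with a prescribed correlation to each of two parent vectors. It is an illustration under stated assumptions, not the method from the publications: the function name `fuse_with_target_correlation`, the random third component, and the QR-based decorrelation step are all hypothetical choices made for this example.

```python
import numpy as np


def fuse_with_target_correlation(static_vec, motion_vec, r_static, r_motion, seed=0):
    """Return a vector whose Pearson correlation with `static_vec` is
    `r_static` and with `motion_vec` is `r_motion`.

    Hypothetical helper for illustration only; not the published method.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(static_vec, dtype=float)
    y = np.asarray(motion_vec, dtype=float)

    # Stack the two parents with an auxiliary random vector and center each column.
    noise = rng.standard_normal(x.shape[0])
    m = np.column_stack([x, y, noise])
    m = m - m.mean(axis=0)

    # Orthonormalize the columns (QR), fixing signs so the first two basis
    # vectors stay aligned with the centered parents.
    q, r = np.linalg.qr(m)
    q = q * np.sign(np.diag(r))

    # Target correlation matrix over (static, motion, fused).
    c = np.corrcoef(x, y)[0, 1]
    target = np.array([
        [1.0, c, r_static],
        [c, 1.0, r_motion],
        [r_static, r_motion, 1.0],
    ])

    # Cholesky factor of the target; raises LinAlgError if the requested
    # correlations are not jointly achievable (matrix not positive definite).
    chol = np.linalg.cholesky(target)

    # Mixing the orthonormal basis with the Cholesky factor imposes the target
    # correlations; the third column is the fused vector.
    mixed = q @ chol.T
    return mixed[:, 2]


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    static = rng.standard_normal(512)   # stand-in for a static-domain feature vector
    motion = rng.standard_normal(512)   # stand-in for a motion-domain feature vector
    fused = fuse_with_target_correlation(static, motion, r_static=0.6, r_motion=0.3)
    print(np.corrcoef(fused, static)[0, 1], np.corrcoef(fused, motion)[0, 1])
```

Sweeping `r_static` and `r_motion` over a grid and scoring a classifier on each fused vector is one way such a construction could be used to probe contribution ratios. Not every pair of values is feasible for a given pair of parents, which is why the Cholesky step is allowed to fail.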